Recently I have had to perform some debug/analysis on an unusual case of clients receiving timeouts on a critical WCF service in a production environment. I use the term “unusual” to describe the timeouts due to the nature in which these timeouts occur combined with the operation behaviour of the service when they are occurring. That and the timeouts are extremely severe, every client experiences timeouts, in my experience with WCF is not an expected operational behaviour of the WCF stack. Under normal high load scenarios a percentage of clients will experience timeouts or receive the expected

Server To Busy fault when the WCF service has breached its configured throttle values.

In this specific case knowing the operation capabilities of the WCF service in question I knew that the amount of load on the server although high was well within the capacity of the service in question. Nor was the load significant enough to breach the configured service throttles. I eventually got to the bottom of the issue through debug and crash dump analysis. It was an unexpected/undocumented behaviour of the WCF stack coupled/influenced by a specific behaviour of a custom

UserNamePasswordValidator used by the WCF service.

I thought it would be worth describing the problem in more detail in the hope it might help others who experience WCF Service performance issues, that done seem to "make sense", giving one additional diagnostic check to ad to the toolbox.

Symptoms

The symptoms of the service outage are as follows:

- A high percentage of clients, > 90%, receive timeouts or Server To Busy exceptions

- The Instances service performance Counter is at or close to the the configured or defaulted ServiceThrottlingElement.MaxConcurrentInstances value

- The Calls Per Second Rate service performance counter is low or at 0 for periods of time

- If the service is deployed in an IIS app pool the ASP.NET Application Performance Counter Pipeline Instance Count will be at or close to its configured or defaulted value

- The Security Validation And Authentication Failures performance counter can be non zero

- The service appears to be deadlocked or being starved of requests, the service is not writing log files or updating database etc. but clients experience timeouts or very slow reponses

- Very low CPU utilisation by the process in question

Cause

After generating a process memory dump, from the WCF Service. After examining all of the threads stacks by executing the

EEStack command it was clearly evident that most of the threads in the process where blocked on the unmanaged windows API method

GetQueuedCompletionStatus which is a blocking I/O call. One call was blocked on the

Validate method of the

UserNamePasswordValidator.

It transpires that the the Validate method of the custom

UserNamePasswordValidator used by the WCF service, talks to a proprietary back office system to validate a clients username and password. To prevent a client from brute forcing a users password it will delay the username/password validation response for 5 seconds after the nth unsuccessful attempt. This has very serious consequences for the throughput of the WCF service.

Demonstration Code And Results

To demonstrate this problem I have put together a simple demo base on one of the WCF sample applications. It is the calculator application that implements a simple custom UserNamePasswordValidator. The code itself is not really the interesting part and is pretty trivial, the intent is to demonstrate the symptoms.

Setup

Run the Setup.Bat file in the .\UserNamePasswordValidator\CS folder.

Note you should run this from a VS2010 command prompt with administrative privileges. As it creates a test certificate and grants access to the private key for encryption/signing the message credentials.

Clean-up

Run the CleanUp.bat file in the .\UserNamePasswordValidator\CS folder.

Note you should run this from a VS2010 command prompt with administrative privileges. AS it deletes the test certificate created by setup.bat

Open the solution in VS2010 running as a administrator as the code attempts to register a http.sys listener on port 8001.

There are a number of application settings that can be used to modify the behaviour of the client and service code.

In the

LoadTestClient there are the following settings:

<applicationSettings>

<LoadTestClient.Properties.Settings>

<!-- The number of tasks to create with valid passwords -->

<setting name="NumberTasksValidPassword" serializeAs="String">

<value>10</value>

</setting>

<!-- The number of tasks to create with invalid passwords -->

<setting name="NumberTasksInvalidPassword" serializeAs="String">

<value>2</value>

</setting>

</LoadTestClient.Properties.Settings>

</applicationSettings>

In the service there is one setting to configure the sleep time for invalid requests:

<applicationSettings>

<service.Properties.Settings>

<!-- The sleep time for a request with an invalid password -->

<setting name="InvalidCredentialSleep" serializeAs="String">

<value>1000</value>

</setting>

</service.Properties.Settings>

</applicationSettings>

Test Runs

To demonstrate the behaviour I have put together a few test cases, that vary each of the parameters of the test and show the results.

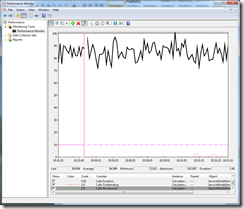

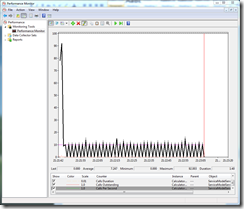

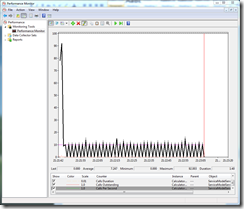

10 Tasks valid credentials , 0 Tasks invalid credentials

Form the graphs above we can see 10 service instances performing approximately 85 calls/second. The calls outstanding counter averages < 1. This is normal operation of the service.

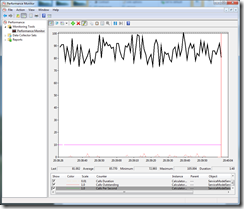

10 Tasks valid credentials , 2 Tasks invalid credentials, Invalid Credential sleep time 1 sec

As we can see can see from the above, the results are relatively similar.

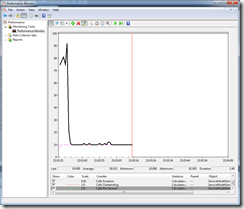

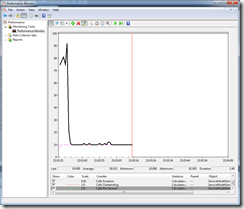

10 Tasks valid credentials , 3 Tasks invalid credentials, Invalid Credential sleep time 1 sec

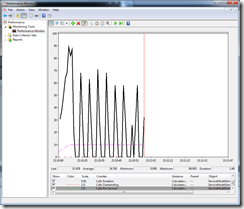

As we can see from the above we have reached a tipping point. When the number of clients making invalid requests increases to 3, we can see initially the service respond times and calls/second are ok. When the three client tasks with invalid passwords start the throughput of the service is severely throttled to approximately 10 calls/sec. I would also draw your attention to the

Calls Duration metric which is close to the other two runs.

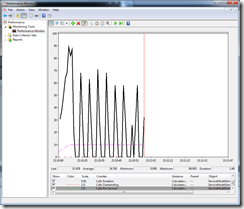

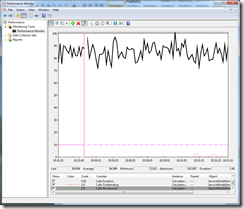

10 Tasks valid credentials , 2 Tasks invalid credentials, Invalid Credential sleep time 5 sec

When the number of clients making invalid requests is set to 2 but the sleep time is increased to 5 seconds we see a very erratic calls/second profile. Again the

Calls Duration metric at the service level is in line with the other tests, but if measured from the client we would see a higher Calls Duration. The

Calls Outstanding metric is also not zero more often than in the previous tests.

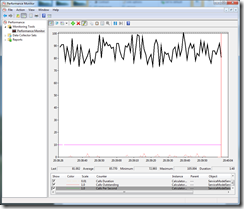

10 Tasks valid credentials , 4 Tasks invalid credentials, Invalid Credential sleep time 1 sec

When the number of clients making invalid requests is set to 4 with a sleep time of 1 seconds, we can see initially the service respond times and calls/second are ok. When the When the 4 client tasks with invalid passwords start the calls/second profile of the service is very erratic. Again the

Calls Duration metric at the service level is in line with the other tests.

In a real world environment it is clear that you will not see such clear patterns to the calls/second metric. It is more likely that the service will in fact not process any requests if the client invalid call pattern is more random and at a higher volume.

Crash Dump Analysis

If we take a crash dump of the WCF Service process while it is in the “Deadlocked/Blocked” state we can examine the processes threads.

0:000> !threads

ThreadCount: 12

UnstartedThread: 0

BackgroundThread: 11

PendingThread: 0

DeadThread: 0

Hosted Runtime: no

PreEmptive Lock

ID OSID ThreadOBJ State GC GC Alloc Context Domain Count APT Exception

0 1 16d0 0000000000205eb0 a020 Enabled 0000000000000000:0000000000000000 00000000001f9780 1 MTA

2 2 184c 000000000020c1f0 b220 Enabled 0000000000000000:0000000000000000 00000000001f9780 0 MTA (Finalizer)

6 a 1708 000000001b9df9d0 a009220 Enabled 0000000000000000:0000000000000000 00000000001f9780 0 MTA (Threadpool Completion Port)

8 6 1ae8 000000001b927570 a009220 Enabled 0000000000000000:0000000000000000 00000000001f9780 0 MTA (Threadpool Completion Port)

9 4 19fc 000000001b926cf0 8009220 Enabled 0000000000000000:0000000000000000 00000000001f9780 0 MTA (Threadpool Completion Port)

10 8 1958 000000001b9e1e40 8009220 Enabled 0000000000000000:0000000000000000 00000000001f9780 0 MTA (Threadpool Completion Port)

11 b e84 000000001b9e6c60 8009220 Enabled 00000000033bb8c0:00000000033bd178 00000000001f9780 0 MTA (Threadpool Completion Port)13 c e18 000000001b9f2260 8009220 Enabled 0000000000000000:0000000000000000 00000000001f9780 0 MTA (Threadpool Completion Port)

14 5 1a58 000000001b90c4a0 8009220 Enabled 0000000000000000:0000000000000000 00000000001f9780 0 MTA (Threadpool Completion Port)

17 9 728 000000001af1f7c0 8009220 Enabled 0000000000000000:0000000000000000 00000000001f9780 0 MTA (Threadpool Completion Port)

18 d 1aec 000000001af1ef40 8009220 Enabled 0000000000000000:0000000000000000 00000000001f9780 0 MTA (Threadpool Completion Port)

19 3 1be0 000000001aed7e00 8009220 Enabled 0000000000000000:0000000000000000 00000000001f9780 0 MTA (Threadpool Completion Port)

We can see that all of the threads are running but the Exception column which lists the last thrown exception (if any) for the thread, shows (ThreadPool Completion Port). We can exclude a few of the threads from inspection, for example thread 0 is the thread executing the Microsoft.ServiceModel.Samples.CalculatorService.Main() method. Thread 2 is the CLR Finalizer thread.

If we look at a few of the other threads call stacks, shortened for brevity, we see the following:

---------------------------------------------

Thread 17

Current frame: ntdll!NtRemoveIoCompletion+0xa

Child-SP RetAddr Caller, Callee

000000001d5deb40 000007fefdf616ad KERNELBASE!GetQueuedCompletionStatus+0x39, calling ntdll!ZwRemoveIoCompletion

000000001d5deb50 000007fef4d46b1c clr!GCHolderBase<1,0,0,1>::Pop+0x2c, calling clr!Thread::EnablePreemptiveGC

000000001d5deb80 000007fef4e309ff clr!Thread::InternalReset+0x140, calling clr!Thread::SetBackground

000000001d5deba0 00000000777aa4e1 kernel32!GetQueuedCompletionStatusStub+0x11, calling kernel32!GetQueuedCompletionStatus

000000001d5debb0 000007fef4d7dd49 clr!Thread::LeaveRuntime+0x9, calling clr!Thread::LeaveRuntimeNoThrow

000000001d5debe0 000007fef4f1eb2f clr!ThreadpoolMgr::CompletionPortThreadStart+0x113, calling kernel32!GetQueuedCompletionStatusStub

000000001d5dec50 000007fef4d46a14 clr!operator delete+0x45, calling kernel32!GetLastErrorStub---------------------------------------------

Thread 18

Current frame: ntdll!NtRemoveIoCompletion+0xa

Child-SP RetAddr Caller, Callee

000000001ce7ec10 000007fefdf616ad KERNELBASE!GetQueuedCompletionStatus+0x39, calling ntdll!ZwRemoveIoCompletion

000000001ce7ec20 000007fef4d46b1c clr!GCHolderBase<1,0,0,1>::Pop+0x2c, calling clr!Thread::EnablePreemptiveGC

000000001ce7ec50 000007fef4e309ff clr!Thread::InternalReset+0x140, calling clr!Thread::SetBackground

000000001ce7ec70 00000000777aa4e1 kernel32!GetQueuedCompletionStatusStub+0x11, calling kernel32!GetQueuedCompletionStatus

000000001ce7ec80 000007fef4d7dd49 clr!Thread::LeaveRuntime+0x9, calling clr!Thread::LeaveRuntimeNoThrow

000000001ce7ecb0 000007fef4f1eb2f clr!ThreadpoolMgr::CompletionPortThreadStart+0x113, calling kernel32!GetQueuedCompletionStatusStub

000000001ce7ed20 000007fef4d46a14 clr!operator delete+0x45, calling kernel32!GetLastErrorStub

As mentioned earlier

GetQueuedCompletionStatus is a blocking operation.

Looking at Thread 8 we can see that it is the only thread executing the

Validate method of the

CustomUsernameValidator.

Thread 8

Current frame: ntdll!NtDelayExecution+0xa

Child-SP RetAddr Caller, Callee

000000001c4cd200 000007fefdf61203 KERNELBASE!SleepEx+0xab, calling ntdll!ZwDelayExecution

000000001c4cd220 000007fee14f2f9a (MethodDesc 000007fee148ffb0 +0x4a System.Xml.XmlBufferReader.GetString(Int32, Int32)), calling (MethodDesc 000007fef39e5540 +0 System.String.CtorCharArrayStartLength(Char[], Int32, Int32))

000000001c4cd270 000007fefdf6358b KERNELBASE!SleepEx+0x12d, calling ntdll!RtlActivateActivationContextUnsafeFast

000000001c4cd2a0 000007fef4d76455 clr!EESleepEx+0x22, calling kernel32!SleepExStub

000000001c4cd2d0 000007fef4f33fba clr!Thread::UserSleep+0x97

000000001c4cd310 000007fee14e95bf (MethodDesc 000007fee1470360 +0x2f System.Xml.XmlBaseReader.get_Value()), calling clr!JIT_WriteBarrier_Fast

000000001c4cd320 000007fef4f3410c clr!ThreadNative::Sleep+0x100, calling clr!Thread::UserSleep

000000001c4cd3d0 000007fee166fca5 (MethodDesc 000007fee148f5e8 +0x85 System.Xml.XmlBaseReader.ReadElementString())

000000001c4cd3e0 000007fee167ed39 (MethodDesc 000007fee148d818 +0x19 System.Xml.XmlDictionaryReader.ReadStartElement(System.Xml.XmlDictionaryString, System.Xml.XmlDictionaryString))

000000001c4cd3e8 000007ff00160be6 (MethodDesc 000007ff001b4f98 +0x116 Microsoft.ServiceModel.Samples.CalculatorService+CustomUserNameValidator.Validate(System.String, System.String)), calling (MethodDesc 000007fef3a5cd98 +0 System.Threading.Thread.Sleep(Int32))

Summary

In my opinion it is evident from the test results and the stack trace analysis presented in this post that there is some inherent "queuing/pipelining" in the processing of messages with credentials. Whether or not this is due to the fact that a custom

UserNamePasswordValidator is being used is unclear. It is also evident that this “pipeline” is sensitive to execution latency of the Validate method of the

UserNamePasswordValidator, possibly as a side effect of being a shallow queue.

Either a number of requests of moderate latency or a smaller number of requests with high latency can have a impact on the overall performance of the service. It is therefore something to be aware of when implementing custom

UserNamePasswordValidator types that perform credential validation that may be subject to non trivial execution latency either due to I/O or CPU.

In my opinion this behaviour could be exploited to create an effective DOS attack, as the attacker does not have to create a huge request load to affect the WCF services performance. In the specific case that I discovered in the production environment I modified the code to mitigate the problem while remaining secure.